The Everyday Misinformation Project has published its second public report.

The report, Beyond Quick Fixes: How Users Make Sense of Misinformation Warnings on Personal Messaging, uncovers the varied ways in which users interpret misinformation warnings on personal messaging platforms.

This report comes at an important time, as the Online Safety Bill is currently being debated in the UK House of Lords. The bill would require social media providers to take responsibility for harmful content published on their platforms, including misinformation. However, for encrypted apps such as WhatsApp, this could mean compromising end-to-end encryption in order to monitor and censor messages, something Meta says it is not prepared to do.

Retaining end-to-end encryption is important for privacy. But, as the current debate about the bill reveals, it makes misinformation harder to tackle on messaging platforms, because misleading content cannot be automatically flagged, removed, or deprioritised.

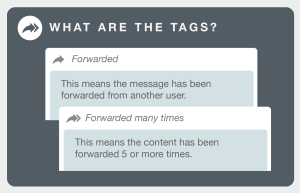

Based on a qualitative, context-sensitive analysis of people’s own accounts of their experiences and attitudes, we evaluated the effectiveness of the main measure in place against misinformation on personal messaging: WhatsApp’s “forwarded” and “forwarded many times” tags.

These tags aim to prompt user reflection on the source and veracity of forwarded messages. But they may not be as effective as presumed. Users in our study pointed to a range of interpretations of the tags, with some seeing them as indicating “important,” “viral” or practical content.

We argue that the corporate design choices behind the "forwarded" tags, which aimed to reduce user friction and to avoid priming people to associate the platform with the spread of misinformation, can undermine the effectiveness of these warnings because they leave the tags open to ambiguous interpretations. We conclude that the connection between "forwards" and the potential spread of misinformation ought to be made more explicit to users.

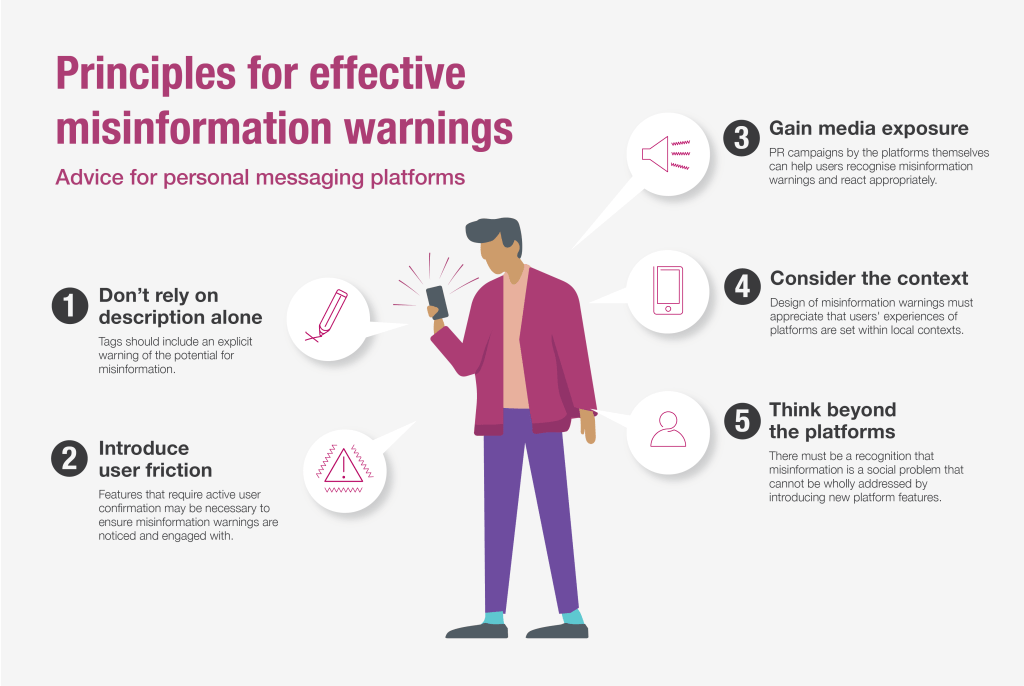

Building on these insights, the report puts forward five principles for designing effective misinformation warnings on personal messaging platforms.

The Report’s Key Findings

Associating Tags with Misinformation

The effectiveness of the “forwarded” and “forwarded many times” tags relies on an association between forwards and misinformation in the minds of users.

This association may not be strong in all contexts. It may be particularly weak among users who believe they do not usually receive forwards.

News media coverage and publicity campaigns could help build a clear understanding of why the tags were introduced, helping users make sense of these measures.

Unintended Associations

Some users associate forwards with viral and unwelcome jokes because they routinely receive large amounts of this kind of forwarded content. This may lead them to dismiss content tagged "forwarded" or "forwarded many times" rather than engage critically with its veracity or origin, as the tags intend.

Other users associate the status of “forwarded” with more desirable characteristics. These include content that is socially valuable or contains important or useful information. A minority of our participants attributed quality or importance to content marked “forwarded many times.”

Still other users may be unaware of or indifferent to the tags, reflecting a lack of appreciation of the role forwards play in spreading misinformation.

Five Principles for the Design of Effective Misinformation Warnings

- Don’t rely on description alone. Simply indicating that a feature such as forwarding has been used is not enough. End-to-end encryption means warnings cannot be topic-based, but they could still signal the potential for misinformation more clearly than they do at present.

- Introduce user friction. Misinformation warnings may be ignored or overlooked unless they incorporate more intrusive designs that force the user to stop and reflect.

- Gain media exposure. Platforms should engage in publicity campaigns to spread the word about the intended purpose of misinformation warnings.

- Consider the context. Understanding the different ways personal messaging platforms are used across contexts is crucial to the design of misinformation warnings that are relevant and useful. These contexts are dynamic, as they are shaped by social norms as well as people’s relationships with others.

- Think beyond platforms. Technological features need to be combined with socially oriented anti-misinformation interventions, focusing particularly on building people’s capacity to challenge misinformation and to work together to use personal messaging platforms in ways that help reduce it.

The report is free and open access. You can download your free copy here.

You can read the Loughborough University press release here.

This report is based on the first and second phases of the Everyday Misinformation Project. We conducted two waves of in-depth semi-structured interviews with people in three regions: London, the East Midlands, and the North East of England (wave 1 n=102, wave 2 n=80). We also asked participants to voluntarily contribute examples of both accurate and misleading information they had seen or shared on personal messaging. We recruited participants using Opinium Research’s national panel of over 40,000 people. Those taking part roughly reflect the diversity of the UK population in terms of age, gender, ethnicity, educational attainment, and a basic indicator of digital literacy.

Online personal messaging platforms have grown rapidly in recent years. In the UK, WhatsApp has 31.4 million users aged 18 and over—about 60% of the entire adult population—and is more widely and frequently used than any of the public social media platforms. Facebook Messenger has 18.2 million UK adult users.

We thank the Leverhulme Trust for its generous financial support for this ongoing project, and the project participants for being so generous with their time.